Cloudera Data Platform

Solution update, March 3, 2021

The Cloudera Data Platform (CDP) is effectively a convergence of the Hadoop platforms previously offered separately by Cloudera and Hortonworks. The new Cloudera has adopted the open source principle previously advocated by Hortonworks: everything within CDP is now available under an open source license, whereas some Cloudera products were previously treated as proprietary. The company has also adopted a cloud-first development process, whereby new features become available first in the cloud (AWS or Azure) and only subsequently for on-premises implementations.

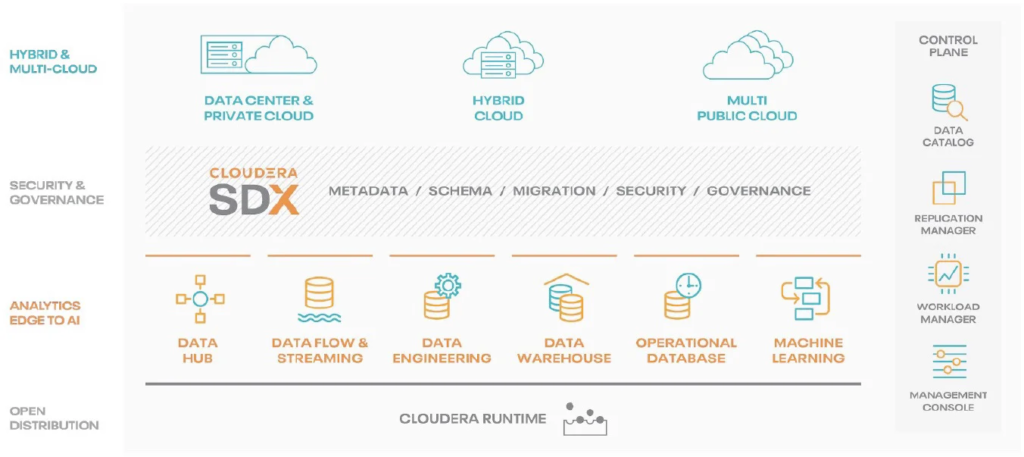

Fig 01 – The Cloudera Data Platform

Figure 1 is a marketecture diagram showing the capabilities provided by CDP. The whole environment involves more than 30 different open source (Apache) projects, particularly in the security and governance, and analytics layers, but it would be tedious to call each of these out by name. Note that the Cloudera Data Warehouse represents one use of CDP but is not otherwise discussed here.

Customer Quotes

“Real-time analytics and scalability are factors imperative to the sustainable growth of BSE. This will ensure that our critical systems are future proof so we can continue to enable the industry by building capital market flows. Cloudera meets our custom requirement by providing us with industry-standard technology and infrastructural expertise that has helped us deploy the highest number of references with the lowest total cost of ownership among vendors.”

Bombay Stock Exchange

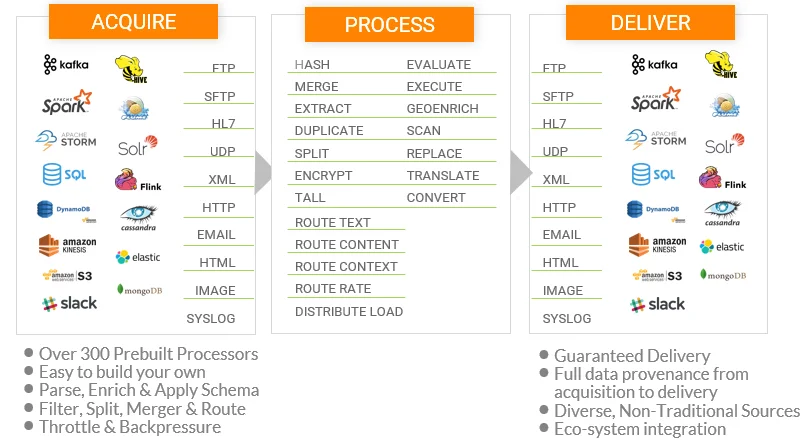

For data ingestion, stream processing, real-time streaming analytics and large-scale data movement into the data lake or cloud stores, CDP's DataFlow capabilities, powered by Apache NiFi, MiNiFi, Kafka, Flink or Spark Streaming, are ideal. They support the journey of data-in-motion from the edge to the cloud or the enterprise through an integrated platform. ELT (extract, load and transform) is supported, with Apache NiFi powering the extract and load stages while Hive and Tez, or Spark, provide the transform stage. Traditional ETL is also possible, though the majority of CDP use cases operate at a much higher scale, in terms of both volume and velocity, than traditional ETL. Figure 2 illustrates the sorts of capabilities provided.
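The ELT ordering described above can be sketched in plain Python. This is a hypothetical illustration only: the `extract`, `load` and `transform` functions, the in-memory `lake` dictionary and the table names are assumptions standing in for NiFi (extract and load) and Hive or Spark (transform), and none of them is a CDP API. What it shows is the ordering: raw records land in the lake unchanged, and curation happens afterwards, inside the lake.

```python
from collections import defaultdict

# Hypothetical illustration only: plain Python stands in for NiFi (extract/load)
# and Hive or Spark (transform). None of these functions are CDP APIs.

def extract(source_rows):
    """Pull raw records from a source system unchanged."""
    return list(source_rows)

def load(raw_rows, lake):
    """Land raw records in the 'lake' as-is, with no cleansing (the 'L' in ELT)."""
    lake.setdefault("raw_orders", []).extend(raw_rows)

def transform(lake):
    """Derive a curated table inside the lake after loading (the 'T' in ELT)."""
    totals = defaultdict(float)
    for row in lake["raw_orders"]:
        if row.get("amount") is None:  # incomplete records are filtered at transform time
            continue
        totals[row["customer"]] += float(row["amount"])
    lake["orders_by_customer"] = dict(totals)

lake = {}
source = [
    {"customer": "a", "amount": "10.5"},
    {"customer": "b", "amount": None},
    {"customer": "a", "amount": "4.5"},
]
load(extract(source), lake)
transform(lake)
print(lake["orders_by_customer"])  # {'a': 15.0}
```

Because the raw table is kept intact, the incomplete record for customer `b` is still available in `raw_orders` for later reprocessing; only the derived table excludes it.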

Fig 02 – The CDP integration environment and functions

As far as data quality is concerned, Cloudera's approach is that you land your data in your data lake, curate it using a third-party data preparation tool, and then move it into your data warehouse if appropriate. Thus, while data cleansing transformations are available, there is no dedicated data quality tool as such. On the other hand, CDP includes Cloudera SDX (Shared Data Experience), which comprises the Hive Metastore, Apache Ranger for security, Apache Atlas for governance and Apache Knox for single sign-on.

Atlas is especially worth calling out because it provides a metadata repository for assets within the enterprise: that is, details about the assets derived from the use of technologies such as Hive, Impala, Spark, Kafka and so on. More than 100 asset types are supported out of the box, and you can define additional asset classes. Under the hood, a graph database is used to store asset definitions and instances, and the graph-based nature of the product allows you to explore relationships between different asset classes and supports data lineage. It also supports the classification of assets, and these classifications can be linked to glossary terms to make assets easier to discover. Policies are applied to assets by attaching tags to columns; these tags propagate through lineage and are then applied automatically to all derived tables. This is especially useful for activities such as masking sensitive data. Atlas integrates with Apache Ranger to enable classification-based access control.
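A minimal sketch of the tag-propagation idea, assuming a simple column-level lineage graph held in a Python dictionary. The column names, the `PII` tag, the `pii_reader` role and the `propagate` and `read_value` helpers are all hypothetical; this is not the Atlas or Ranger API, only an illustration of how a tag attached once to a source column can flow along lineage edges to derived columns and then drive a masking decision.

```python
from collections import deque

# Hypothetical column-level lineage: each key maps to the columns derived from it.
lineage = {
    "crm.customers.email": ["staging.customers.email"],
    "staging.customers.email": ["mart.contacts.email", "mart.campaign.email"],
    "crm.customers.country": ["mart.contacts.country"],
}

def propagate(tags, lineage):
    """Push each column's tags downstream along lineage edges (breadth-first)."""
    result = {col: set(t) for col, t in tags.items()}
    queue = deque(tags)
    while queue:
        col = queue.popleft()
        for child in lineage.get(col, []):
            child_tags = result.setdefault(child, set())
            new = result[col] - child_tags
            if new:  # only revisit a child when it gains tags, so cycles terminate
                child_tags |= new
                queue.append(child)
    return result

def read_value(column, value, user_roles, tags):
    """Hypothetical tag-based policy: mask PII columns unless the user holds 'pii_reader'."""
    if "PII" in tags.get(column, set()) and "pii_reader" not in user_roles:
        return "****"
    return value

# Tag the source column once; derived columns inherit the tag automatically.
tags = propagate({"crm.customers.email": {"PII"}}, lineage)
print(read_value("mart.campaign.email", "x@example.com", {"analyst"}, tags))  # ****
print(read_value("mart.contacts.country", "UK", {"analyst"}, tags))  # UK
```

The design choice the sketch illustrates is that the policy is written once, against the tag rather than against individual tables, so newly derived tables are covered as soon as lineage links them to a tagged source.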

In addition to the metadata repository provided by Atlas, Cloudera also offers the Cloudera Data Catalog. This is intended for data stewards and end users to browse, curate and tag data. It provides a single pane of glass for data security and governance across all deployments, with the notable (current) exception that it is limited to data stored within the Cloudera environment.

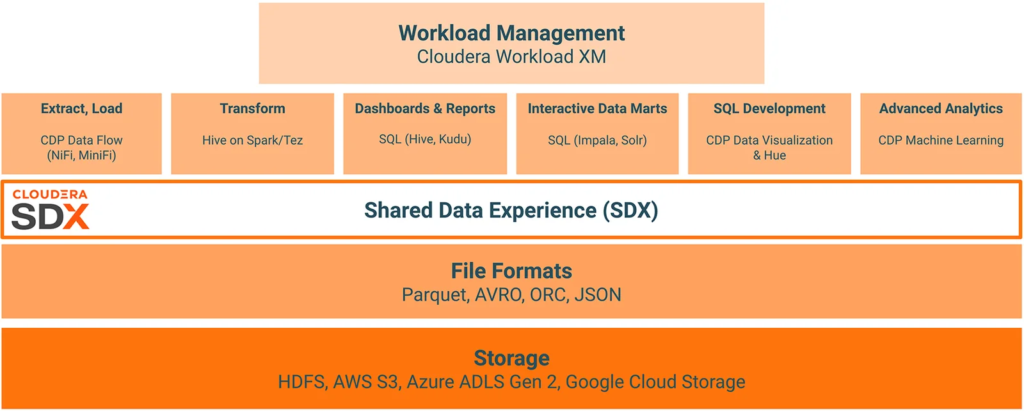

The major reason for adopting CDP is that it is entirely open source and supports a very wide range of open source technologies, many of which are not widely supported by other vendors. For example, relatively few other suppliers support Apache Flink or Sqoop. In addition, CDP forms the underlying platform for implementing the Cloudera Data Warehouse as either a data warehouse or a data lake. In this context, Figure 3 is included to illustrate that Cloudera is not just about Hadoop: note the support for object storage, Apache Druid and so on.

Fig 03 – Cloudera Data Warehouse within the broader CDP environment

Going into specifics, we particularly like the way that Apache Atlas enables governance policies to be defined once and then applied automatically across all relevant tables. On the other hand, we would like to see more automation built into the platform. Machine learning has been implemented to support workload management, but otherwise it is primarily a roadmap item at present. Cloudera is clearly on the right track here but, being purists, we would like to see it go faster!

The Bottom Line

Readers need to let go of the idea of equating Cloudera with Hadoop. Cloudera is a general-purpose, data- and database-oriented organisation that is focused on open source as well as on the public, hybrid and private cloud. That will be a powerful argument in its favour for many people.

Learn how Bloor Research can support your organization's journey toward a smarter, more secure future.