Attunity

Analyst Coverage: Daniel Howard

Attunity is a provider of data management and data integration solutions. It was originally founded as ISG International Software Group in 1988 but changed its name to Attunity in 2000. It boasts more than 2,000 global customers, 44 of which are part of the Fortune 100, as well as a wide array of technology and consulting partners, including Microsoft, Amazon, Hortonworks, Cloudera and Snowflake. Attunity has regional headquarters in the United States, the UK, and Hong Kong. The company also has offices in Israel, France, Germany, and Sweden.

Attunity Compose

Last Updated: 12th February 2019

Attunity Compose is in principle a data warehouse automation solution. However, this description is slightly misleading, as there are two distinct versions of Compose: Compose for Data Warehouses and Compose for Data Lakes. Both are designed to automatically create analytics-ready data structures for their respective targets, and they may be used separately or together. Used in conjunction, they allow you to store data in a data lake before exposing it via data marts.

Attunity Replicate is another important product in the Attunity suite, particularly in concert with Compose. It provides automated data delivery, replication and ingestion to and from an impressively large range of data targets and sources, including data warehouses, data lakes, mainframes and the cloud (Amazon, Microsoft Azure, Google Cloud). Additional features include a modern, drag-and-drop user interface; zero performance footprint on data sources; and Change Data Capture, the ability to capture changes to data sources in real time. Attunity Replicate feeds source metadata updates to Compose-managed data lake and data warehouse targets on a real-time basis.

Finally, Attunity also offers Attunity Enterprise Manager, which provides automated task and data flow management as well as reporting and performance monitoring, and Attunity Visibility, an analytics platform for data warehouses and data lakes. Enterprise Manager integrates and shares metadata across heterogeneous environments that include Compose-enabled data pipelines.

Customer Quotes

“We were looking for a data warehouse automation tool with a visual and model-based approach, as well as one that would reduce the need for our IT team to do large amounts of coding themselves. Attunity Compose is that solution. It has saved and will continue to save us many hours of labor.”

Poly-Wood

“A Fortune 500 global insurance firm used Attunity to cut 45 days of ETL coding to 2, reduced implementation costs 80%, and accelerated data warehouse updates to once monthly from twice annually.”

Compose for Data Warehouses is a data warehouse automation solution. It allows you to create and populate data warehouses and data marts in a highly automated fashion using a variety of wizards. Compose can help you automate every part of the data warehouse lifecycle, as shown in Figure 1. Moreover, options for customisation are available at every step, from creating your data model to populating your data marts. A variety of visualisation tools are provided, allowing you to graphically view, for example, the structure of your data model. This is all done without any manual coding or scripting.

Compose for Data Lakes, on the other hand, is designed to let you create a governed data lake providing curated and analytics-ready data. Curation in this case could refer to standardisation, formatting, and subsetting, among other things. There are two stages to this: first, Compose will merge all incoming ingested data into a continuously updated historic data store; second, it will allow you to provision and enrich that data before exposing it for further use. The historic data store retains a full change history and can replay this history at any time. In addition, Compose maintains a centralised ‘mini’ data catalogue for your data lake. This is not intended to be used as a standalone catalogue – although that’s certainly possible – but to support dedicated data cataloguing products by feeding your metadata to them via a REST API.
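The two stages described above can be illustrated with a minimal Python sketch. This is not Attunity's implementation or API: the names `ChangeEvent`, `HistoricStore`, `merge`, `replay` and `provision` are hypothetical, and the in-memory list stands in for whatever storage a real data lake would use. It only shows the general idea of keeping a full change history, replaying it to any point, and then provisioning a curated subset.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change (hypothetical shape, for illustration only)."""
    seq: int                              # position in the change stream
    op: str                               # "insert", "update" or "delete"
    key: str                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # new or changed column values


@dataclass
class HistoricStore:
    """Stage 1: an append-only history that can be replayed on demand."""
    history: List[ChangeEvent] = field(default_factory=list)

    def merge(self, events: Iterable[ChangeEvent]) -> None:
        # Add incoming changes and keep the history ordered by sequence.
        self.history.extend(events)
        self.history.sort(key=lambda e: e.seq)

    def replay(self, up_to_seq: Optional[int] = None) -> Dict[str, Dict[str, Any]]:
        # Rebuild the table state as of a given point in the history
        # (or the latest state when up_to_seq is None).
        state: Dict[str, Dict[str, Any]] = {}
        for event in self.history:
            if up_to_seq is not None and event.seq > up_to_seq:
                break
            if event.op == "delete":
                state.pop(event.key, None)
            elif event.op == "update":
                state[event.key] = {**state.get(event.key, {}), **(event.row or {})}
            else:  # treat anything else as an insert
                state[event.key] = dict(event.row or {})
        return state


def provision(state: Dict[str, Dict[str, Any]], columns: List[str]) -> List[Dict[str, Any]]:
    """Stage 2: a simple form of curation, here subsetting to chosen columns."""
    return [{c: row.get(c) for c in columns} for row in state.values()]


store = HistoricStore()
store.merge([
    ChangeEvent(1, "insert", "c1", {"name": "Ada", "city": "London"}),
    ChangeEvent(2, "update", "c1", {"city": "Paris"}),
    ChangeEvent(3, "delete", "c1"),
])
as_of_2 = store.replay(up_to_seq=2)        # state before the delete
curated = provision(as_of_2, ["name"])     # analytics-ready subset
```

Because the history is never overwritten, any earlier state can be reconstructed simply by replaying fewer events, which is the property the historic data store is described as providing.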

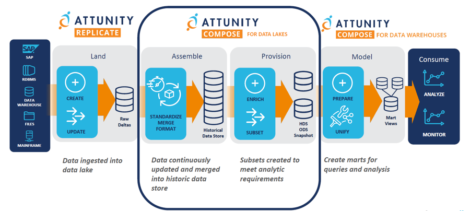

Once you have created and curated your data lake, Attunity offers a variety of options for utilising the data within it. For starters, Attunity offers specialised solutions for integrating Compose with Apache Hive, as well as Amazon Redshift. Moreover, Compose for Data Lakes is also compatible with Compose for Data Warehouses. This forms part of an architectural pattern, as seen in Figure 2, that allows you to use the combination of Replicate and both versions of Compose to ingest data into a data lake, curate and provision it, then expose it for consumption in a selection of data marts.

This architecture extends the advantages offered by Replicate and Compose, such as a high degree of automation, to the entirety of your data pipeline. Notably, all three constituent products update continuously: Replicate via Change Data Capture, and the two versions of Compose via the historic data store, which is itself kept continuously updated. This means that the whole of your data pipeline will continuously synchronise with its data source to keep your data marts up to date, with all the benefits that implies for timely business insights.

There are two major selling points for Attunity Compose. The first is common to all competent data warehouse automation products, and it’s in the name: automation. The ability to automate most or even all of the legwork that goes into creating a data warehouse, for example specifying the data model or creating ETL instructions, yields a large saving in both time and effort.

The second is decidedly less common: Compose’s ability to create data lakes populated with fresh, curated data that is ready for analytics, then expose that data, either via data marts or to an analytics platform directly. This provides two very significant benefits. First of all, it provides substantial data lake management capabilities. Secondly, by providing analytics-ready data, it allows any analytics tools to provide far more relevant and timely insights. Combining these two advantages allows you to leverage big data within your analytics tools quickly and effectively, before your data or your insights have time to become stale. This is a significant differentiator for Attunity, particularly given the continuing popularity of data lakes.

The Bottom Line

Attunity Compose for Data Warehouses is a data warehouse automation solution that is fast and easy to use. Compose for Data Lakes brings many of the same advantages to the data lake, as well as providing its own in the form of data lake management and governance. Together, they let you take advantage of both big data and data warehousing, allowing you to shepherd data from your data lakes into data marts. In all three of these use cases, Compose is a product worth your consideration.