Data Quality


Data quality is about ensuring that data is fit for purpose: that it is accurate, timely and complete enough for the use to which it is put. As a technology, data quality can be applied either after the fact, by correcting data already held, or preventatively, by stopping bad data from being entered in the first place.

Data quality products usually cover a range of areas, including data profiling, data cleansing, matching and merging (discovering duplicate records) and data enrichment (adding, say, geocodes, postal codes or assorted business data such as credit data to records).
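Data profiling is usually the first of these steps, since it measures the state of the data before anything is cleansed or matched. The sketch below computes per-field completeness and distinct-value counts and flags postal codes that fail a format rule. The records, field names and the simplified UK-style postcode pattern are all hypothetical assumptions; commercial tools apply far richer rule sets and reference data.

```python
import re

# Hypothetical customer records; the field names and values are illustrative only.
RECORDS = [
    {"name": "Jane Smith", "postcode": "SW1A 1AA", "email": "jane@example.com"},
    {"name": "John Doe", "postcode": "XYZ", "email": None},
    {"name": "jane smith", "postcode": "SW1A 1AA", "email": "jane@example.com"},
    {"name": None, "postcode": "EC1A 1BB", "email": "info@example.org"},
]

# Simplified UK-style postcode rule (an assumption; production rules are stricter).
POSTCODE_RE = re.compile(r"^[A-Z]{1,2}[0-9][A-Z0-9]? ?[0-9][A-Z]{2}$")


def profile(records):
    """Return the completeness and distinct-value count of every field."""
    fields = sorted({key for rec in records for key in rec})
    report = {}
    for field in fields:
        values = [rec.get(field) for rec in records]
        present = [v for v in values if v not in (None, "")]
        report[field] = {
            "completeness": len(present) / len(values),  # share of non-empty values
            "distinct": len(set(present)),
        }
    return report


def invalid_postcodes(records):
    """Return the postcodes that fail the simplified format rule."""
    return [
        rec["postcode"]
        for rec in records
        if rec.get("postcode") and not POSTCODE_RE.match(rec["postcode"])
    ]


if __name__ == "__main__":
    for field, stats in profile(RECORDS).items():
        print(field, stats)
    print("invalid postcodes:", invalid_postcodes(RECORDS))
```

Run as a script, it reports an email completeness of 0.75 and flags `XYZ` as the only invalid postcode.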

Data quality also provides one of the sets of functionality required for data governance and master data management (MDM). Some data quality products include specific capabilities to support data stewards and/or facilities such as issue tracking.

Data quality products provide tools to perform various automated or semi-automated tasks that ensure that data is as accurate, up-to-date and complete as you need it to be. This may, of course, be different for different types of data: you want your corporate financial figures to be absolutely accurate but a margin of error is probably acceptable when it comes to mailing lists.

Data quality tools provide a range of functions. A tool might simply alert you that a postal code is invalid and leave you to fix it; alternatively, the software, perhaps integrated with an ERP or CRM product, might prevent an invalid postal code from being entered at all, prompting the user to re-enter it. Some functions, such as adding a geocode to a location, can be completely automated, while others will almost always require some manual intervention. For example, the software can identify potentially duplicate records and calculate the probability that they match, but a business user or data steward may still need to approve the match. Vendors are increasingly using artificial intelligence here, training software to observe the actions of human domain experts and then improving its suggestions based on those observations.
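The division of labour between automatic matching and steward approval can be sketched as a scoring function with two cut-offs: pairs above the upper one are merged automatically, pairs between the two are queued for a data steward, and the rest are rejected. This is a minimal illustration, not any vendor's algorithm: it uses Python's standard `difflib.SequenceMatcher` similarity ratio as a stand-in for a true match probability, and the thresholds, field names and helper names are hypothetical.

```python
from difflib import SequenceMatcher

# Illustrative thresholds (assumptions, not any vendor's defaults).
AUTO_MERGE = 0.95   # at or above this, treat the pair as the same entity
REVIEW = 0.80       # between REVIEW and AUTO_MERGE, ask a data steward


def normalise(text):
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def match_score(a, b):
    """Average string similarity (0..1) over the fields both records share."""
    fields = [f for f in a if f in b]
    if not fields:
        return 0.0
    ratios = [
        SequenceMatcher(None, normalise(a[f]), normalise(b[f])).ratio()
        for f in fields
    ]
    return sum(ratios) / len(ratios)


def classify(a, b):
    """Route a candidate pair to auto-merge, steward review or no match."""
    score = match_score(a, b)
    if score >= AUTO_MERGE:
        return "auto-merge", score
    if score >= REVIEW:
        return "steward review", score
    return "no match", score


if __name__ == "__main__":
    master = {"name": "Jane Smith", "address": "1 High Street, Leeds"}
    candidates = [
        {"name": "jane  smith", "address": "1 High Street, Leeds"},
        {"name": "Jayne Smith", "address": "1 High St, Leeds"},
        {"name": "Acme Ltd", "address": "99 Dock Road, Hull"},
    ]
    for cand in candidates:
        decision, score = classify(master, cand)
        print(f"{decision:15} {score:.2f} {cand['name']}")
```

Here the case and spacing variant is merged automatically, "Jayne Smith" at "1 High St" scores about 0.92 and goes to a steward, and the unrelated company is rejected. Real products typically add address standardisation, phonetic keys and calibrated probabilities on top of a simple similarity measure like this.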

Poor data quality can be very costly indeed, and numerous studies have examined, and demonstrated, this point. The CFO should care. Conversely, good data quality ensures that your information about your customers is as complete and as accurate as it can be, which means that, as we move further into a world of one-to-one marketing, the CMO will also be interested in data quality. For companies that recognise data as a corporate asset, data quality will matter to line-of-business managers and to everybody up to the CEO.

Further, data quality is of particular importance to compliance officers, to data governance (the two overlap) and to CIOs. We discuss its relevance to compliance in the section on emerging trends; for CIOs, data quality matters in a number of technical environments such as data migration, where poor data quality can undermine the success of the project and increase both its cost and its duration. The same applies to data warehousing, where failed or delayed implementations have frequently been attributed to poor data quality.

In recent years the rise of machine learning has meant that AI techniques can be used to improve automation, by observing how human domain experts resolve issues and then automatically suggesting new business rules based on these observations. Many data quality products now use such techniques and, with the rise in public awareness of large language model (LLM) tools such as ChatGPT, most vendors feature machine learning claims prominently in their marketing material. The degree to which machine learning is actually used in the software, rather than on PowerPoint sales slides, varies substantially from vendor to vendor.
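The idea of deriving a business rule from expert behaviour can be illustrated, in a deliberately simplified form, by picking the match cut-off that best reproduces a log of past steward decisions. This is plain threshold tuning, not the machine-learning approach of any particular product; the function name, the scores and the decision history are hypothetical.

```python
def suggest_threshold(decisions):
    """Suggest a match cut-off that best reproduces past steward decisions.

    decisions: list of (match_score, steward_approved) pairs.
    Returns (threshold, accuracy): pairs scoring >= threshold would be treated
    as matches. On ties the lowest qualifying threshold is kept.
    """
    if not decisions:
        raise ValueError("need at least one recorded decision")
    best_threshold, best_accuracy = None, -1.0
    # Only observed scores can change the outcome, so try each one as a cut-off.
    for t in sorted({score for score, _ in decisions}):
        agreed = sum((score >= t) == approved for score, approved in decisions)
        accuracy = agreed / len(decisions)
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = t, accuracy
    return best_threshold, best_accuracy


if __name__ == "__main__":
    # Hypothetical history of (score, approved?) decisions made by a data steward.
    history = [
        (0.97, True), (0.93, True), (0.90, True), (0.86, False),
        (0.84, True), (0.78, False), (0.70, False),
    ]
    threshold, accuracy = suggest_threshold(history)
    print(f"suggested rule: match if score >= {threshold} ({accuracy:.0%} agreement)")
```

With the sample history it suggests a cut-off of 0.84, agreeing with six of the seven recorded decisions. In practice such a suggestion would be offered to a steward for approval rather than applied silently.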

Data quality software will be around as long as human beings enter data into computer systems, but the recent advances in machine learning have certainly given vendors an opportunity to significantly up their game when it comes to automating data quality processes.

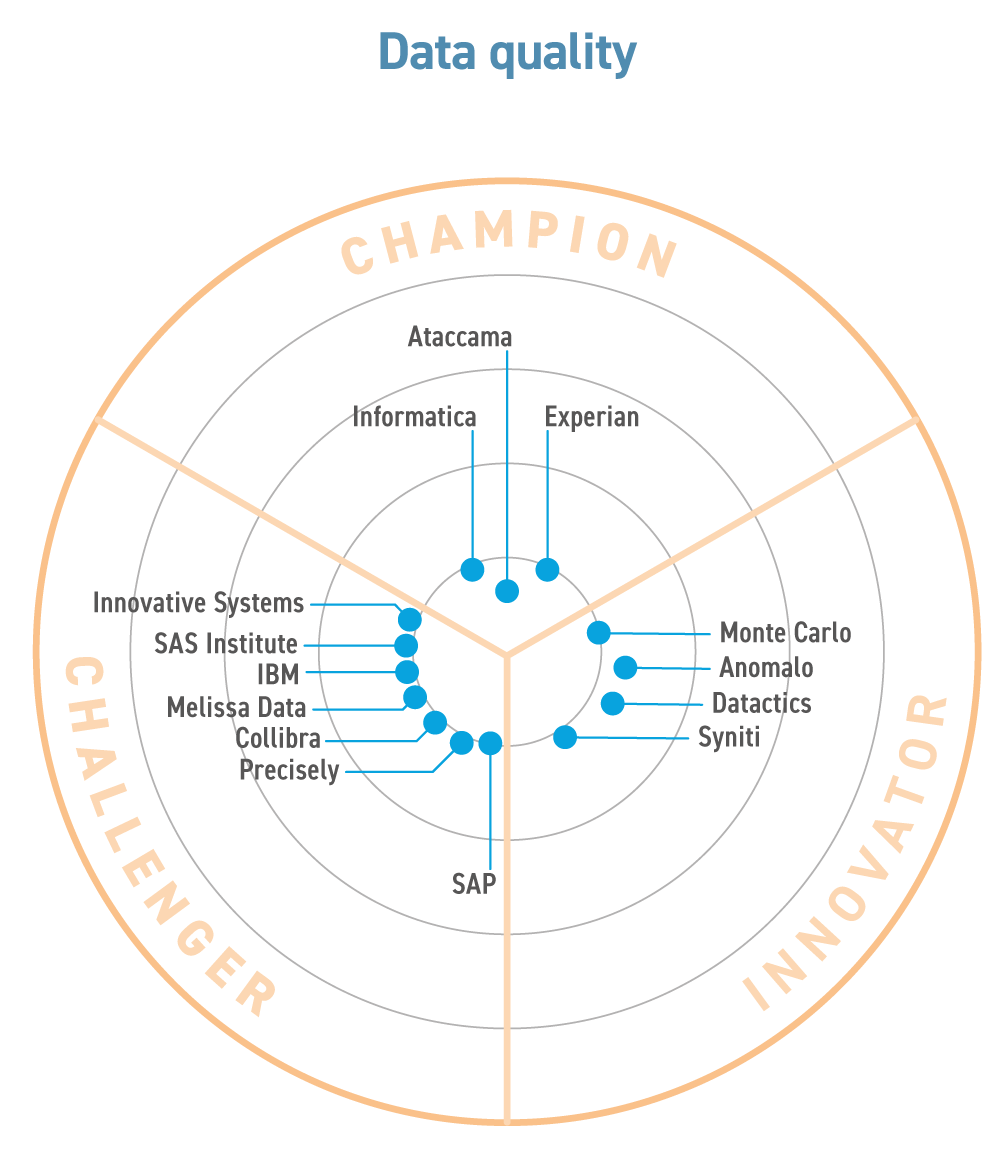

The data quality market is mature and has changed little over the last several years, apart from some merger and acquisition activity. One notable feature has been the emergence of a number of smaller companies from non-English-speaking countries, such as Uniserv and Ataccama, as credible suppliers.

In general, the market is split between those companies that focus purely on data quality and those that also offer ETL (extract, transform and load) functionality, MDM (master data management), or both. Some of these “platforms” have been built from the ground up, while others consist of disparate elements that have been bolted together. There is also a distinction between vendors that provide specialist facilities for product matching (which is more complex than name and address matching) and those that do not. Many data quality products still focus on customer name and address data, since that is common to almost every company and organisation.

Related Blog

- Viamedici – major new software version

- Ataccama unveil new AI agent feature

- Aim (Data Solutions)

- Syniti data governance

- SAS Viya continues to develop

- Syniti update

- Redpoint – Data quality for the customer experience

- Innovative Systems update

- Datactics Data Quality Update

- Informatica Data Quality Offering

- Experian data quality update

- SAS Data Quality Update

- DataRush extends its boundaries

Related Research

- Data management strategies for financial services

- Master Data Management (2025)

- Informatica MDM

- SAP Master Data Governance (2025)

- Stibo – STEPping out

- Data Preparation Challenges in Healthcare and Voracity from IRI

- Anomalo – Data Quality Monitoring

- Solix – Data Governance from Archiving to AI

- RAGs to Riches?

- Viamedici

- Monte Carlo – Don’t Gamble With Data Quality

- Data Quality with Informatica

- Data Quality with Innovative Systems

- Collibra Data Quality and Observability (March 2024)

- Data quality strategy through Datactics (2024)

Learn how Bloor Research can support your organization’s journey toward a smarter, more secure future.