Ataccama and Data Fabric

Solution update, February 23, 2024

What is it?

Ataccama has its roots in the data quality market and later expanded into master data management and data governance. It has a data catalogue, a core component of a data fabric architecture, in which data assets are mapped and presented to business users, for example as a knowledge graph, so that they see the data landscape through a semantic layer rather than as physical structures. A user might be interested in something like “customer”, which may actually be stored in several underlying systems, such as an ERP system, a sales force automation system and perhaps some marketing systems too. Because Ataccama’s core strength has been data quality and master data management, it is well positioned to present a clear, consistent view of such data to business users. Its technology already has comprehensive data quality capabilities that can be applied at source. It also has the survivorship rules needed within its master data management hub to derive a “golden copy” of a customer record from the multiple versions that may exist in the source systems.

Mutable Award: Gold 2024

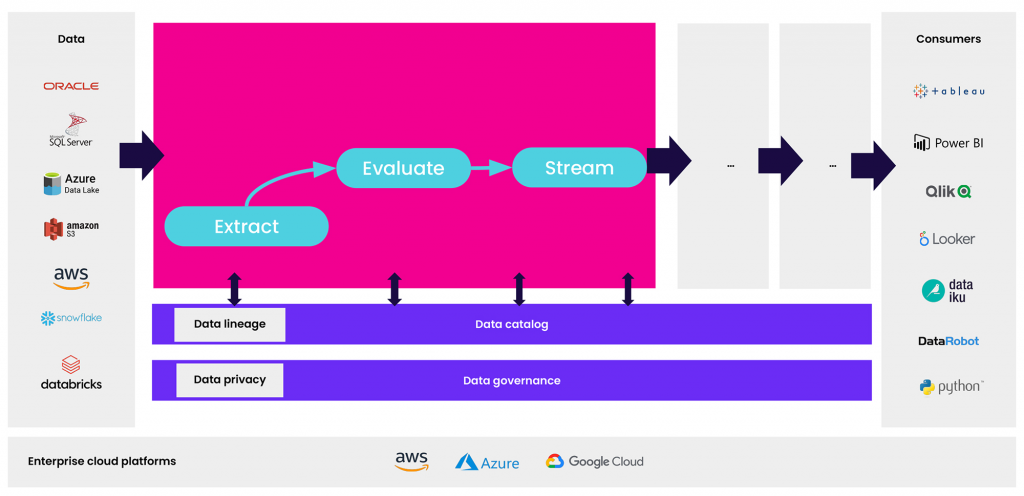

Fig 2: Ataccama ONE AI-powered data management platform

The Ataccama software goes beyond this, though. It has an active metadata layer that can access the metadata of underlying source applications such as SAP or Salesforce, detect changes in them and update its catalogue automatically, so the catalogue remains current. Furthermore, it has had an AI layer since 2016. This was initially based on machine learning and was used for transformations and anomaly detection, as well as to provide a natural language interface to its metadata; it was trained on user interactions in order to generate better data quality rules. More recently, Ataccama has incorporated OpenAI’s GPT-4 generative AI technology, as used in ChatGPT. This can do things such as populate descriptions and generate business rule logic from business descriptions. Since December 2023 this generative AI capability, which includes generating SQL queries, has been part of the core product. Ataccama has built several safeguards around it, for example validating any generated code before applying it. Unlike a public AI, which will almost always give you an answer to a question even if that involves making something up (an issue known as “hallucination”), the Ataccama AI layer will simply state that it cannot provide an answer if the answer fails its internal validation checks.
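Ataccama does not describe how its validation step works. As a purely illustrative sketch, assuming the generated code is a SQL query and using SQLite’s `EXPLAIN` as a stand-in for a source system, the Python below shows the general “validate before applying, otherwise decline to answer” pattern. The function names `validate_generated_sql` and `answer`, the SELECT-only rule and the sample `customer` table are hypothetical, not Ataccama’s API.

```python
import sqlite3
from typing import List, Tuple, Union


def _normalise(sql: str) -> str:
    # Drop surrounding whitespace and a single trailing semicolon
    return sql.strip().rstrip(";").strip()


def validate_generated_sql(conn: sqlite3.Connection, sql: str) -> Tuple[bool, str]:
    stmt = _normalise(sql)
    # Hypothetical safeguard 1: only read-only SELECT statements are allowed
    if not stmt.lower().startswith("select"):
        return False, "only SELECT statements are allowed"
    # Hypothetical safeguard 2: reject multiple statements (conservative: this
    # also rejects semicolons that appear inside string literals)
    if ";" in stmt:
        return False, "multiple statements are not allowed"
    try:
        # EXPLAIN compiles the query against the real schema without running it,
        # so unknown tables or columns are caught here
        conn.execute("EXPLAIN " + stmt)
    except sqlite3.Error as exc:
        return False, f"failed validation: {exc}"
    return True, "ok"


def answer(conn: sqlite3.Connection, generated_sql: str) -> Union[List[tuple], str]:
    ok, reason = validate_generated_sql(conn, generated_sql)
    if not ok:
        # Decline rather than return something unverified
        return f"Cannot provide an answer ({reason})"
    return conn.execute(_normalise(generated_sql)).fetchall()


# Demo: an in-memory database stands in for a source system
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER, name TEXT)")
conn.execute("INSERT INTO customer VALUES (1, 'Acme Ltd')")
print(answer(conn, "SELECT name FROM customer;"))
print(answer(conn, "SELECT email FROM customer"))
```

Checking against the compiled schema rather than pattern-matching the text means a query that refers to a column the source does not have is declined instead of being run.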

Customer Quotes

“Other vendors are transactional in their behaviour. With Ataccama there is a genuine belief of shared responsibility of success that we feel within T-Mobile.”

Daniel West, Data Management Lead, T-Mobile

“Ataccama ONE really is a one-stop-solution… that means everyone is capable of fully understanding our data asset inventory to power the next stage of our growth and ambitions.”

Catherine Yoshida, Head of Data Governance, Teranet

What does it do?

Ataccama provides a data catalogue with active metadata support, as described earlier. It uses this to ingest metadata from source systems, and it can also manage data pipelines. Its data quality firewall ensures that all the data it handles is checked against the data quality rules that have been established. Its master data management capability means that records duplicated across source systems are matched and merged into a golden record, based on business rules and survivorship rules applied to the sources of data. This is a genuine strength of Ataccama compared with many vendors in the data fabric space.
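The match-and-merge idea can be illustrated with a minimal, generic Python sketch. It assumes a naive exact match on a normalised e-mail address and a hypothetical survivorship rule (most trusted source first, then most recent update); the source names, priorities and fields are invented for illustration and are not Ataccama’s rules or API. Production matching is often fuzzy and considerably richer.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional

# Hypothetical trust ranking: a lower number means a more trusted source
SOURCE_PRIORITY = {"ERP": 0, "SFA": 1, "Marketing": 2}


@dataclass
class SourceRecord:
    source: str                      # system the record came from
    updated: date                    # last update date in that system
    attrs: Dict[str, Optional[str]]  # attribute name -> value (None if missing)


def match_key(rec: SourceRecord) -> str:
    # Naive match rule: exact match on a normalised e-mail address
    return (rec.attrs.get("email") or "").strip().lower()


def survive(group: List[SourceRecord]) -> Dict[str, Optional[str]]:
    # Survivorship rule: for each attribute, keep the first non-empty value from
    # the most trusted source, breaking ties by the most recent update
    ranked = sorted(
        group,
        key=lambda r: (SOURCE_PRIORITY.get(r.source, len(SOURCE_PRIORITY)), -r.updated.toordinal()),
    )
    golden: Dict[str, Optional[str]] = {}
    for rec in ranked:
        for field, value in rec.attrs.items():
            # None and empty strings are treated as missing
            if value and field not in golden:
                golden[field] = value
    return golden


def build_golden_records(records: List[SourceRecord]) -> Dict[str, Dict[str, Optional[str]]]:
    groups: Dict[str, List[SourceRecord]] = defaultdict(list)
    for i, rec in enumerate(records):
        # Records without an e-mail get their own key, so they are never merged
        groups[match_key(rec) or f"unmatched-{i}"].append(rec)
    return {key: survive(group) for key, group in groups.items()}


records = [
    SourceRecord("Marketing", date(2024, 1, 10), {"email": "JANE@EXAMPLE.COM", "name": "Jane D.", "phone": "555-0101"}),
    SourceRecord("ERP", date(2023, 6, 1), {"email": "jane@example.com", "name": "Jane Doe", "phone": None}),
    SourceRecord("SFA", date(2023, 11, 5), {"email": "jane@example.com ", "name": "Jane Doe", "phone": "555-0199"}),
]
print(build_golden_records(records))
```

In this sample the three records collapse into one golden record that takes its name from the ERP system (the most trusted source) and its phone number from the sales force automation record, because the ERP record has no phone value.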

Ataccama’s knowledge graph has existed since 2016 and can be represented in several ways, in order to show business users their data landscape in terms that make business sense. Ataccama can also generate queries that retrieve data from source systems as needed, based on its understanding of the data structures recorded in its data catalogue. It does this by pushing queries down to the underlying source systems, such as Snowflake. While it can cache certain frequently used data, it is not a database, and any data it stores other than master data and metadata is transient. Although the vendor does not describe itself as having an optimiser, it does in fact do many of the things a database optimiser would do in deciding the best way to satisfy a user query, including taking a cost-based optimisation approach.
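Cost-based optimisation means estimating the cost of each candidate way of answering a query and choosing the cheapest. The sketch below is a generic illustration, not Ataccama’s optimiser: the three candidate plans (local filtering, pushdown to the source, reading a transient cache), the per-row cost constants and the function names are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical unit costs; a real optimiser would derive these from statistics
SCAN_COST_PER_ROW = 0.001          # work inside the source system per row scanned
TRANSFER_COST_PER_ROW = 0.01       # cost of moving one row out of the source
LOCAL_FILTER_COST_PER_ROW = 0.002  # cost of filtering one row in the fabric layer
CACHE_READ_COST_PER_ROW = 0.0005   # cost of reading one row from a local cache


@dataclass
class Plan:
    description: str
    estimated_cost: float


def candidate_plans(table_rows: int, selectivity: float,
                    source_can_filter: bool, cached_rows: Optional[int]) -> List[Plan]:
    matching_rows = table_rows * selectivity
    # Always possible: pull every row out of the source and filter locally
    plans = [Plan("fetch all rows, filter locally",
                  table_rows * (SCAN_COST_PER_ROW + TRANSFER_COST_PER_ROW + LOCAL_FILTER_COST_PER_ROW))]
    if source_can_filter:
        # Pushdown: the source scans and filters, so only matching rows are transferred
        plans.append(Plan("push filter down to source",
                          table_rows * SCAN_COST_PER_ROW + matching_rows * TRANSFER_COST_PER_ROW))
    if cached_rows is not None:
        # A transient cached copy can be filtered without touching the source
        plans.append(Plan("filter cached copy",
                          cached_rows * (CACHE_READ_COST_PER_ROW + LOCAL_FILTER_COST_PER_ROW)))
    return plans


def choose_plan(plans: List[Plan]) -> Plan:
    # Cost-based choice: pick the plan with the lowest estimated cost
    return min(plans, key=lambda p: p.estimated_cost)


print(choose_plan(candidate_plans(1_000_000, 0.01, source_can_filter=True, cached_rows=None)))
```

With these made-up constants, pushing a selective filter down to the source wins because far fewer rows have to be moved; if the source could not filter, a cached copy would be cheaper than transferring the whole table.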

The vendor does not claim to provide an all-encompassing solution for every data management activity, and it has links to a variety of partner technologies, such as data visualisation and analysis tools.

Why should you care?

Ataccama can provide many elements of a modern data fabric architecture. While many vendors have a data catalogue, few have an in-depth background in data quality and master data management that ensures the data served up to business users is accurate, complete and timely. The vendor has had seven years of production code incorporating various types of artificial intelligence, and it has a well-thought-out approach to generative AI that is already live in its product rather than merely on a technology roadmap. I have been following Ataccama since soon after its inception in 2008, and its technology stack has always struck me as very well thought out, which is reflected in its successful implementations in some very demanding customer environments.

The bottom line

Ataccama can provide a significant part of a data fabric architecture. Its modern technology stack is used by hundreds of customers, and its underlying focus on data quality makes it well suited as the basis for serving up data to business users. Organisations wanting to implement a data fabric architecture should give Ataccama serious consideration.

