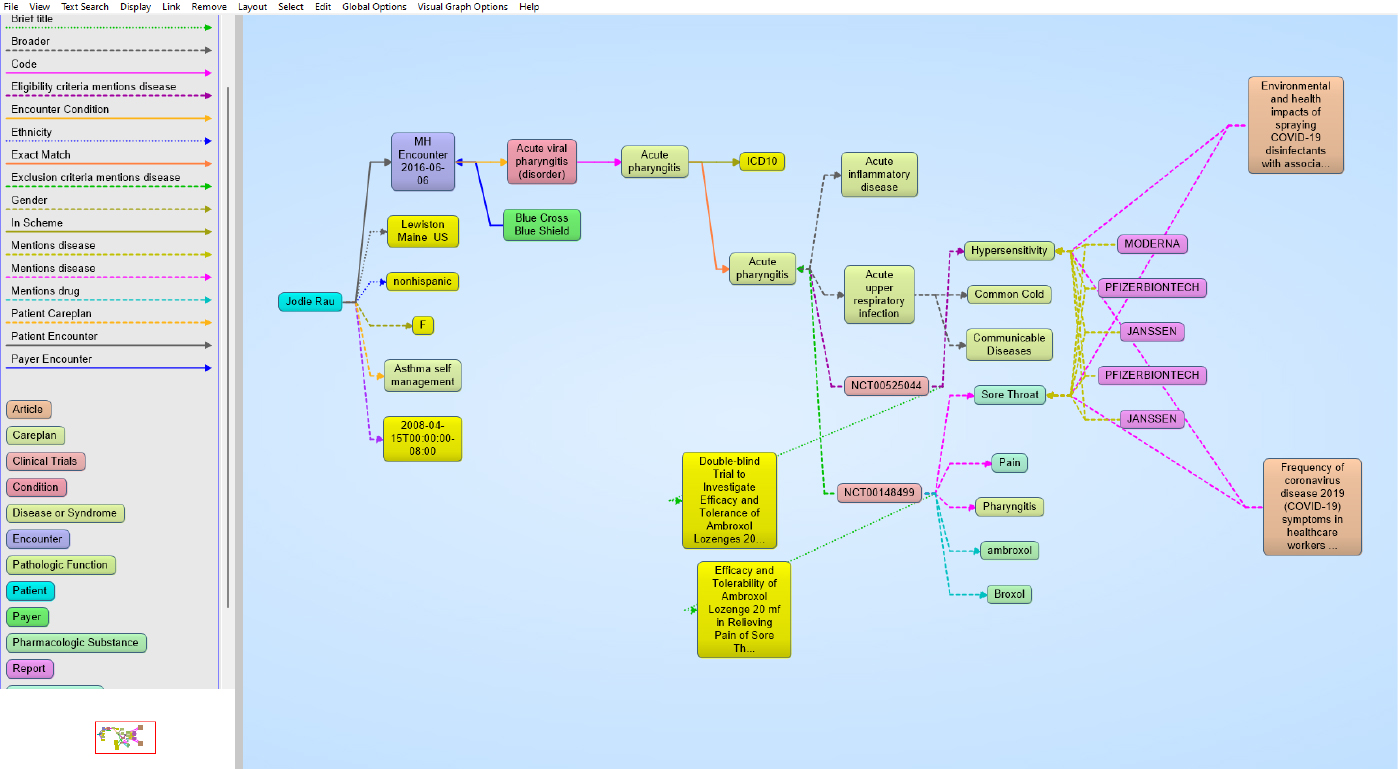

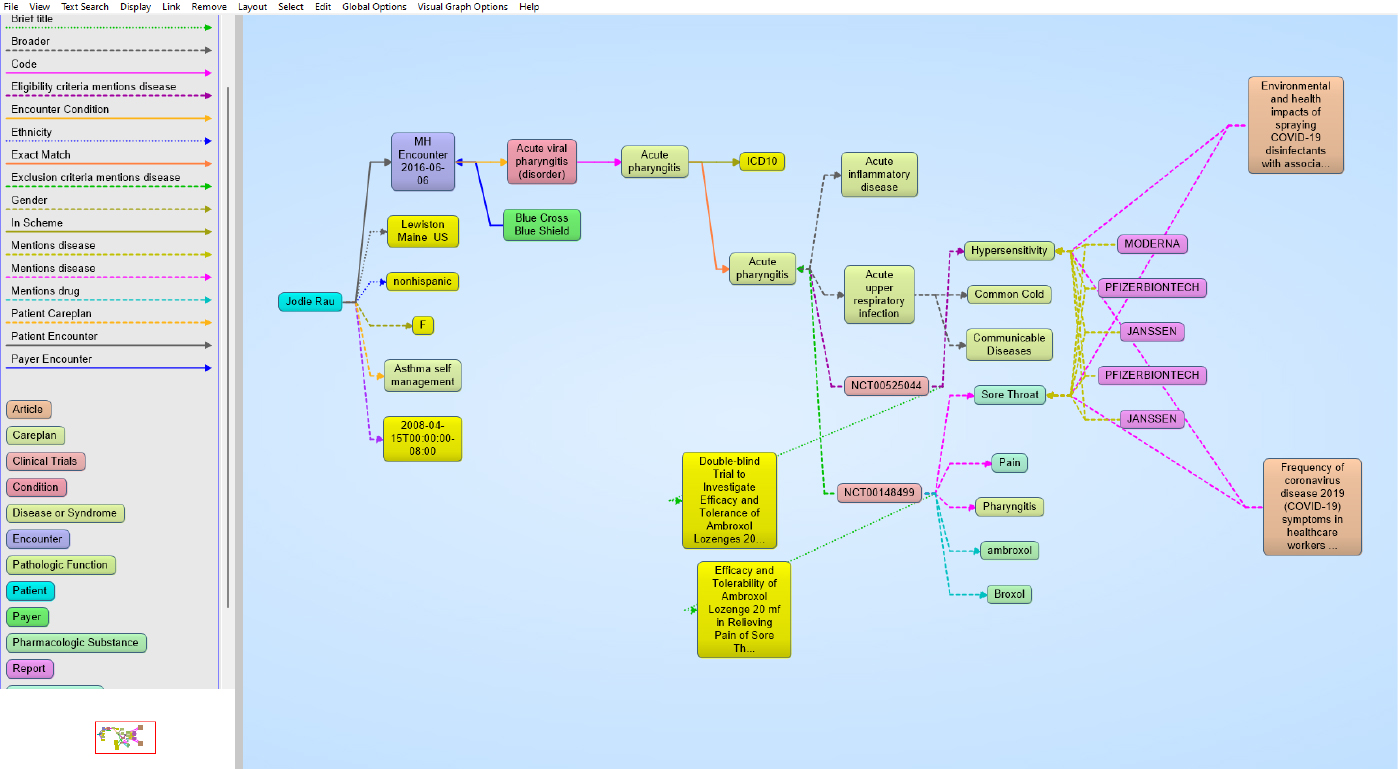

Fig 2 - Entity-event knowledge graph in Franz AllegroGraph

The basic idea behind AllegroGraph is that it will transform your existing enterprise data into triples, which can be thought of as either entities or events, and store them as (part of) a knowledge graph. The initial, and significant, upshot of this is that it makes it much easier to visualise, understand and query your data. This is especially true for complex queries. For example, picking out a single user and obtaining a comprehensive view of their relationships is almost trivial, requiring only a single line of SPARQL. Moreover, Entity-Event Knowledge Graphs (EEKGs) allow you to capture core entities, related events and relevant knowledge bases within a hierarchical tree structure, an example of which is shown in Figure 2. Notably, EEKGs can be built incrementally, starting with a simple model and extending as needed without altering what came before. They also store provenance information and data lineage.
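To make the triple model concrete, the following is a minimal sketch in plain Python, not AllegroGraph's API. All identifiers and sample data (`user:alice`, `ex:hasEvent`, `describe` and so on) are hypothetical. It shows entities and events stored as (subject, predicate, object) triples, a single-entity lookup that roughly corresponds to a one-line SPARQL pattern such as `SELECT ?p ?o WHERE { <user> ?p ?o }`, and an incremental extension that leaves the earlier triples untouched.

```python
# Hypothetical sample data: each fact is a (subject, predicate, object) triple.
TRIPLES = {
    ("user:alice", "rdf:type", "ex:Customer"),        # an entity
    ("user:alice", "ex:hasEvent", "event:order-1"),   # entity linked to an event
    ("event:order-1", "rdf:type", "ex:OrderEvent"),   # an event
    ("event:order-1", "ex:date", "2024-03-01"),
    ("user:bob", "ex:knows", "user:alice"),
}


def describe(triples, entity):
    """Collect every edge that leaves or enters one entity."""
    outgoing = sorted((p, o) for s, p, o in triples if s == entity)
    incoming = sorted((s, p) for s, p, o in triples if o == entity)
    return {"outgoing": outgoing, "incoming": incoming}


# A comprehensive view of a single user's relationships.
view = describe(TRIPLES, "user:alice")

# Extending the model incrementally: new triples (here an assumed provenance
# link) are added alongside the old ones, which are not altered.
extended = TRIPLES | {("event:order-1", "ex:source", "file:crm-export.csv")}
```

In a real triple store the lookup would be expressed as a query rather than a Python comprehension. The sketch is only meant to show why a per-entity view falls out of the data model so directly.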

One of the most notable features of AllegroGraph is its capacity for neuro-symbolic AI. The key insight driving this technology is that several types of AI exist, each with significant strengths and weaknesses. LLMs, for instance, are incredibly easy and intuitive to interact with (just look at how they have penetrated the consciousness of the general public) and can provide access to an immense wealth of information. On the other hand, they have already become infamous for their tendency to “hallucinate”, generating false information that often seems like it might be true. Symbolic reasoning, by contrast, is fundamentally consistent and rigorous, since it is based on logical rules, but all of those rules have to be created manually (and usually by an expert), which severely limits its ability to scale. Neural networks, and for that matter machine learning in general, excel at pattern recognition but operate on a garbage in/garbage out paradigm and can have difficulty explaining their results, which can impact trust and undermine compliance efforts.

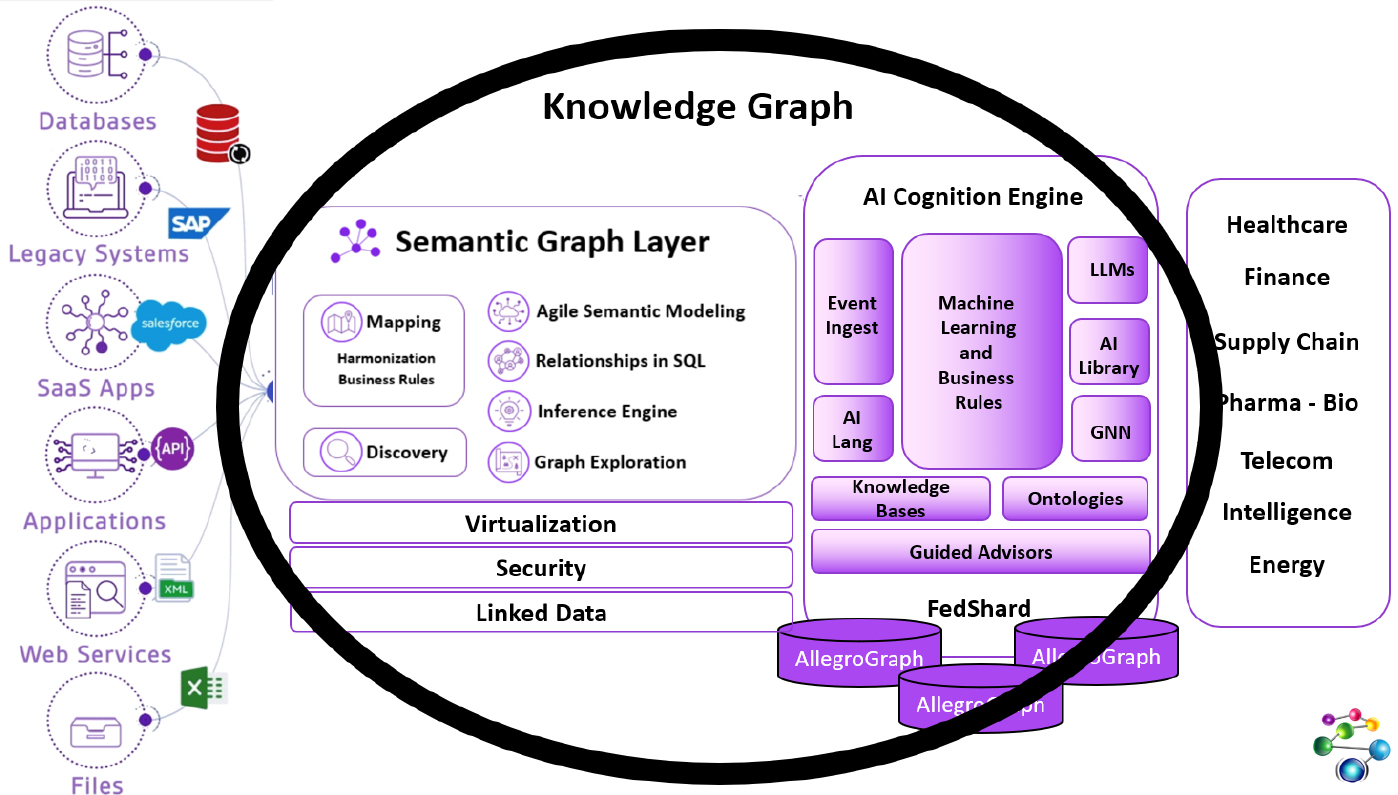

Neuro-symbolic AI, and AllegroGraph by extension, employs these three kinds of AI simultaneously so that the strengths of each can compensate for the weaknesses of the others. For instance, one implementation might use symbolic reasoning to fact-check the results of an LLM, use an LLM to generate an explanation for the output of a neural network, or use a neural network to feed context into a symbolic model. The ideal result is a virtuous cycle, where each technology is able to use the other two to inform and improve its own behaviour. In AllegroGraph, all of this is built around a central knowledge graph, which can use the combined system to estimate the likelihood of future events. These events can then be inserted into your graph with an attached probability that they will occur. The product also provides additional support for AI in general and LLMs in particular. This includes a built-in vector store, RAG (Retrieval-Augmented Generation) functionality, and the ability to dynamically check LLM results against your knowledge graph to help ensure that they are grounded in fact.
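Two of the ideas above, checking LLM output against the graph and storing predicted events with a probability, can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than AllegroGraph's implementation: the graph is a simple set of triples, the LLM's answer is assumed to have already been parsed into candidate triples, and every name here (`check_claims`, `PredictedEvent`, `add_prediction`, the sample data) is hypothetical.

```python
from dataclasses import dataclass

# Hypothetical knowledge graph: a set of (subject, predicate, object) triples.
GRAPH = {
    ("user:alice", "ex:livesIn", "city:london"),
    ("user:alice", "ex:purchased", "product:laptop"),
}


def check_claims(graph, claims):
    """Split claimed triples into those the graph supports and those it does not."""
    supported = [c for c in claims if c in graph]
    unsupported = [c for c in claims if c not in graph]
    return supported, unsupported


@dataclass(frozen=True)
class PredictedEvent:
    """A future event together with the estimated probability that it occurs."""
    subject: str
    predicate: str
    obj: str
    probability: float


def add_prediction(predictions, event):
    """Return a new set of predictions that includes a validated event."""
    if not 0.0 <= event.probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    return predictions | {event}


# Assumed to be triples extracted from an LLM's answer.
llm_claims = [
    ("user:alice", "ex:livesIn", "city:london"),
    ("user:alice", "ex:livesIn", "city:paris"),
]
supported, unsupported = check_claims(GRAPH, llm_claims)

predictions = add_prediction(
    set(), PredictedEvent("user:alice", "ex:willPurchase", "product:monitor", 0.7)
)
```

Note that an unsupported claim is not necessarily false; it is simply absent from the graph, so a real system would need a policy for how to treat such claims (reject, flag for review, or seek further evidence).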

In addition to all of the above, AllegroGraph features native, near real-time multi-master replication and management; multi-modal input from RDF, CSV, JSON, JSON Lines and JSON-LD files; built-in document storage (comparable to MongoDB) with support for graph algorithms and semantics; natural language processing (NLP), speech recognition, and textual analysis, including entity extraction; and extensive support for a variety of data science tools.