Experian

Last Updated:

Analyst Coverage: Andy Hayler and Philip Howard

Experian is one of the world’s leading global information services companies. Its roots go back to 1826, but it was not officially founded until 1996, when distinct US and UK-based credit scoring companies were merged to form Experian under the ownership of Great Universal Stores. A decade later the company was de-merged and became independent. It is now headquartered in Ireland and is a public company listed in London, where it is part of the FTSE 100. Revenues, which are reported in dollars, were $5.37bn in 2021. The company has more than 21,700 employees working in 37 different countries. Apart from Ireland, the company has operational headquarters in the UK, the US and Brazil.

Experian is perhaps best known for its credit scoring but, more broadly, offers a range of information services. Its products and solutions span analytics (for example, Experian PowerCurve), business information, consumer credit, data quality management (Experian Aperture Data Studio), identity and fraud, marketing services and payment delivery.

Experian Aperture Data Studio

Last Updated: 29th March 2021

Mutable Award: Gold 2021

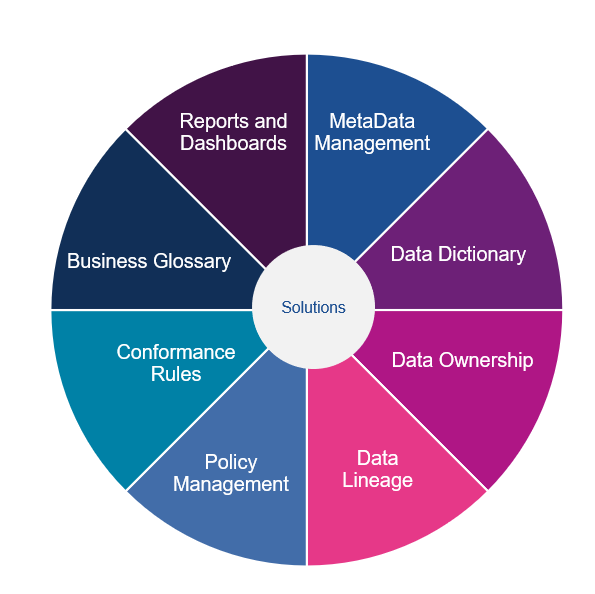

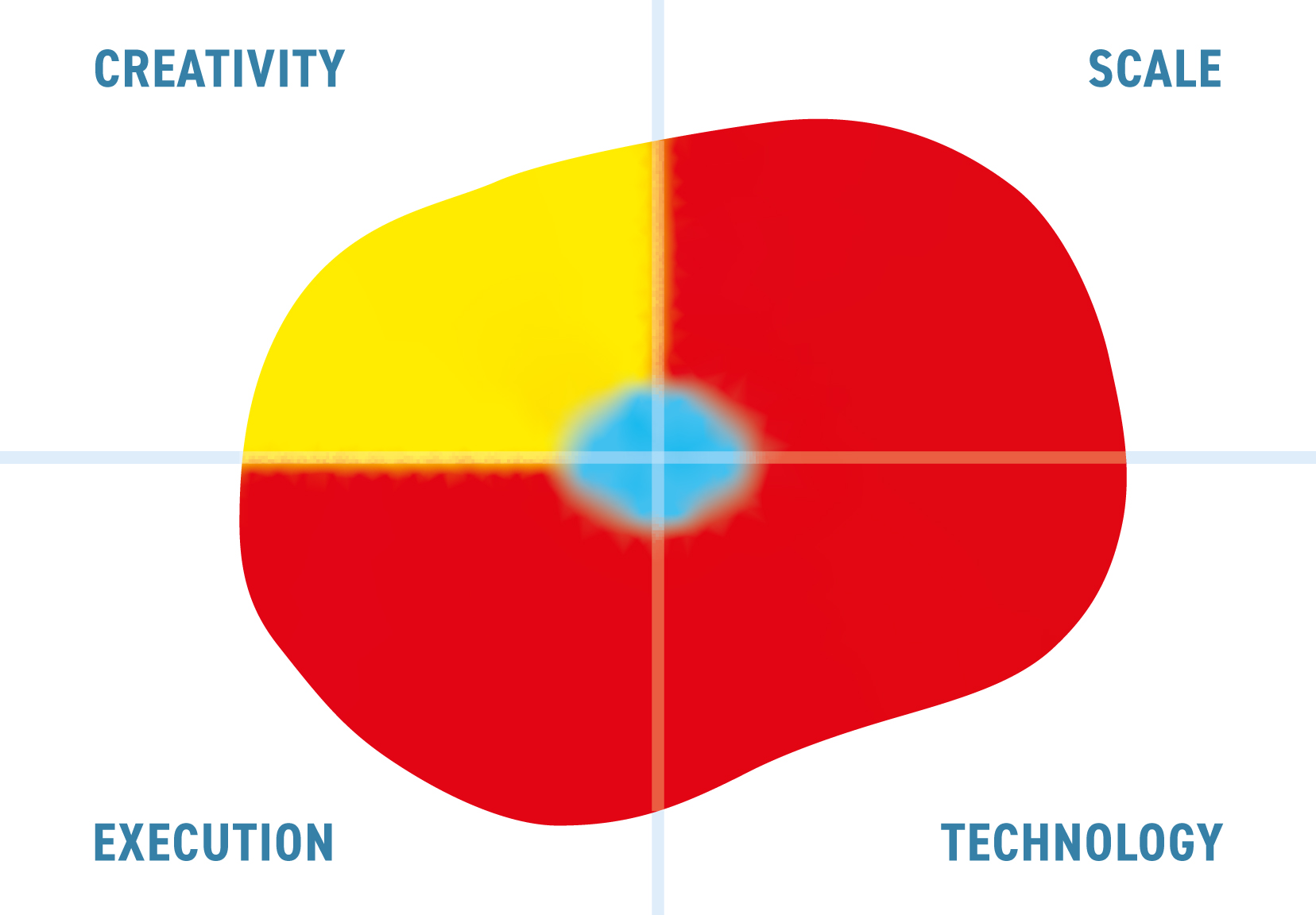

Experian Aperture Data Studio is a data quality and enrichment platform that was first formally released in 2018 to replace Experian Pandora, a product that Experian inherited through the acquisition of X88. The company targets this at the use cases illustrated in Figure 1, which apply across industries and across the globe.

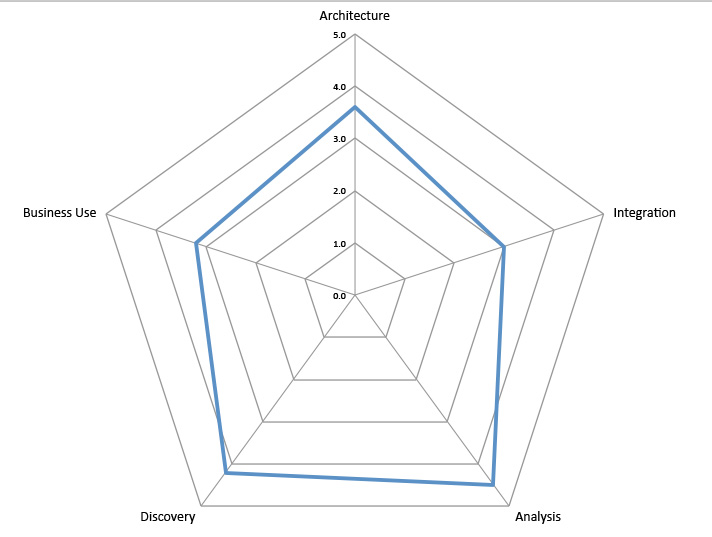

At heart it is a data quality solution that is able to perform in-depth data profiling and data quality analysis. The design elements of the product support all the sorts of functional requirements that are shown in Figure 2. The product is persona-based and, in the latest release (2.2), there is a strong emphasis on supporting collaboration between the people who need to use your data for operational and analytic reasons and those who have a more technical relationship with and understanding of that data.

Customer Quotes

“It is very cool and very powerful. The things you are doing are pretty good, very top notch.”

“I’m impressed. Clean and easy to use, fast install.”

“It is pretty. It is visually appealing. It is inviting.”

“Like Excel on steroids. It is nice. That’s good.”

“The workflows. They are absolutely really good.”

The functions that Aperture Data Studio can perform are detailed in Figure 2. Notable are the enrichment functions that can leverage other aspects of Experian’s data product portfolio for things like geospatial, demographic and credit-based information.

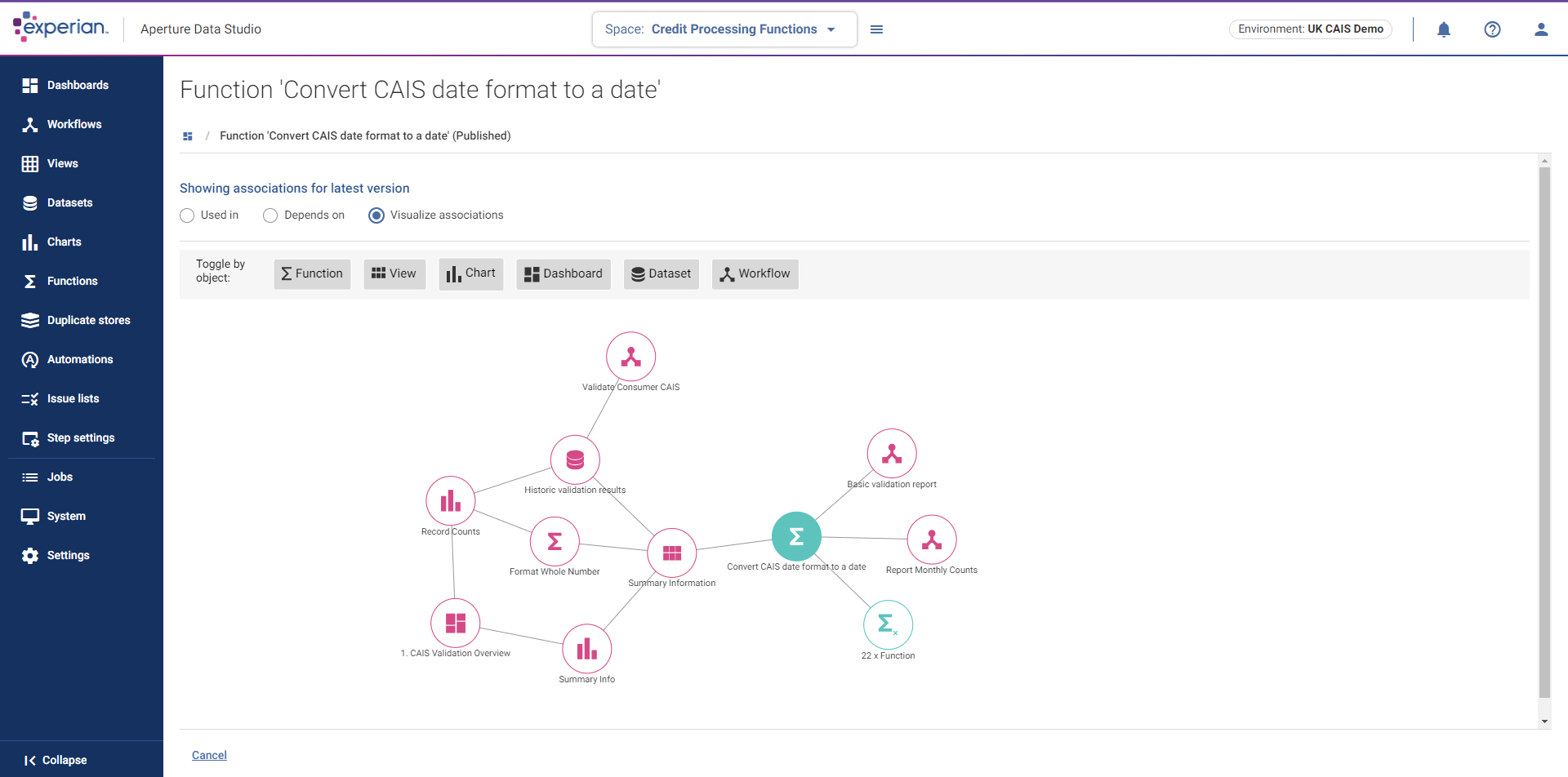

Experian Aperture Data Studio is browser-based, has strong data workflow capabilities and supports user personas. The product has been designed to work within existing technology stacks. Thus, for example, the product’s data preparation capabilities, which are largely targeted at operational rather than analytic environments, are complementary to both data cataloguing and data governance solutions from third-party vendors such as Collibra and Alation. The software will store relevant metadata defining such integration.

As far as data workflow is concerned, Experian Aperture Data Studio separates data profiling (which is done on all your data, not just samples) from data loading. Thus, data profiling is an explicit workflow step or action during data discovery, and therefore distinct from data manipulation and load processes. Moreover, during profiling you can choose which columns to profile rather than profiling the whole table, and interactive drilldown is supported. Address and email verification can also be defined as steps within a workflow, as can functions you have written using the comprehensive Software Development Kit (SDK). Further, it is worth commenting that you can explore and drill down from a workflow without having to run it, which is not the case with some other products. This means users can save time by checking a workflow without having to spend time running it. Other features include the ability to chart results, compare data between runs and undertake version management for workflows. Exhaustive object versioning is a new capability introduced within the latest release and this also supports reversions. Data workflow annotation is supported, as is the ability to embed workflows within other workflows.

As mentioned, Experian Aperture Data Studio is persona-based, and there is a particular emphasis on business users and collaboration. In particular, there is the concept of “spaces”, where you can define and use functions that are specific to a particular area such as a marketing department. Experian is increasingly adding pre-packaged sets of these functions, for example for credit information quality checking or single customer view templates. Individual functions may come out of the box or you can define your own. As an example, you might have a function that obfuscates credit card numbers. If you want to use something more sophisticated, such as format-preserving encryption, then there is an SDK that will enable this. Alongside spaces, Experian also supports pre-configured views, consisting of trusted data, that are specific to a particular space or sub-space, such as a “view for marketing”, so that the marketing department only sees the data that is relevant to its function.
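
To make the obfuscation example concrete, the following is a minimal Python sketch of a function that masks card numbers. It is not Aperture Data Studio code and does not use the product's SDK: the function name `mask_card_number`, the 13 to 19 digit matching pattern and the choice to leave the last four digits visible are all assumptions made for illustration.

```python
import re

# Assumption: a "card number" is 13-19 digits, optionally separated
# by single spaces or hyphens. Real products may use stricter checks.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def mask_card_number(text: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits of card-like numbers with '*'."""

    def _mask(match: re.Match) -> str:
        raw = match.group(0)
        total_digits = sum(ch.isdigit() for ch in raw)
        to_hide = total_digits - visible
        masked = []
        for ch in raw:
            if ch.isdigit() and to_hide > 0:
                masked.append("*")  # hide this digit
                to_hide -= 1
            else:
                masked.append(ch)   # keep separators and trailing digits
        return "".join(masked)

    return CARD_PATTERN.sub(_mask, text)


if __name__ == "__main__":
    print(mask_card_number("Card 4111 1111 1111 1111 on file"))
```

Simple masking of this kind changes the shape of the stored value only cosmetically and is not reversible, which is why something like format-preserving encryption would call for a custom function instead.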

From a matching perspective, an example of duplicate matching rules is illustrated in Figure 3. These are applied when looking for and evaluating potential duplicates. Experian Aperture Data Studio does not apply machine learning to the matching process itself, but it does apply machine learning to matching rules to obtain the best set of those rules.
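
As a rough illustration of what rule-based duplicate matching involves in general, the Python sketch below evaluates a pair of records against a small rule set. The rules, field names and the 0.85 threshold are hypothetical and are not the rules shown in Figure 3; the similarity measure is the standard library's `difflib.SequenceMatcher`, chosen for convenience rather than because the product uses it.

```python
from difflib import SequenceMatcher

# Hypothetical rule set: (field, comparison kind, minimum similarity).
RULES = [
    ("postcode", "exact", 1.0),
    ("name", "fuzzy", 0.85),
]


def normalise(value: str) -> str:
    """Lower-case and trim a value before comparison."""
    return value.strip().lower()


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two normalised strings."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def is_potential_duplicate(rec_a: dict, rec_b: dict, rules=RULES) -> bool:
    """A pair is a candidate duplicate only if every rule is satisfied."""
    for field, kind, threshold in rules:
        a, b = rec_a.get(field, ""), rec_b.get(field, "")
        if kind == "exact":
            if normalise(a) != normalise(b):
                return False
        elif similarity(a, b) < threshold:
            return False
    return True


if __name__ == "__main__":
    first = {"name": "Jon Smith", "postcode": "AB1 2CD"}
    second = {"name": "John Smith", "postcode": "ab1 2cd"}
    print(is_potential_duplicate(first, second))
```

In a setup like this, the part that machine learning could tune is the rule set itself (which fields, which comparison and which thresholds), while the matching run stays deterministic.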

After investigating the first release of Experian Aperture Data Studio we wrote that “most vendors, in any market, try to meet modern requirements by bolting on extra capabilities. If that is simply a question of adding on a feature here or there, that is not a problem. However, when it comes to fundamentals such as self-service and collaboration, these are not the type of services that are amenable to bolting on. Experian is therefore to be applauded for biting the bullet and developing a product suitable for the third decade of this century.” This is the second time that we have reviewed the product since its original release and it continues to build on its early promise.

The Bottom Line

Experian Aperture Data Studio is a modern application for data management and offers the sort of features that businesses require from such a solution. We said something similar in our first review of the product but were forced to qualify our endorsement because some capabilities were missing. Since then we have been happy to remove that qualification: Experian Aperture Data Studio is a product we are happy to recommend.

Experian Data Quality

Last Updated: 14th July 2014

Experian Data Quality provides a number of different product offerings: data capture and validation solutions; data cleansing and standardisation; data matching and deduplication; and the Data Quality Platform.

The data capture and validation solutions, which are often embedded in web-based applications, provide validation capabilities not just for physical addresses but also for email addresses and mobile phone numbers. The company’s data cleansing solution, QAS Batch, is a batch-processing application for cleansing, standardising and enriching data. Thirdly, there is QAS Unify which provides a matching engine and rules-based deduplication.

The Data Quality Platform has three main areas of functionality. Firstly, it provides data profiling and discovery capability. Secondly, it provides prototyping, which allows you to use a graphical rule builder to cleanse and transform your data interactively, the sort of operations that are normally associated with both ETL (extract, transform and load) tools and data cleansing tools, thus creating a 'prototype' of the data you need and generating a specification of what you did to produce it. Thirdly, the Data Quality Platform can be used to instantiate these rules so that the product may be used for data quality and data governance purposes as well as for data migrations. In this last case this is enabled by the fact that the product can generate load files that can be used in conjunction with native application and database loaders. In other words, it enables data migrations without the need for an ETL tool.

Experian has an extensive direct sales force and also a significant partner base. The company’s name change, along with the introduction of the Data Quality Platform, marks a change in direction for the company. Historically it has been a market leader in the name and address space but it wants to move away from party data into the more general data quality market.

Experian Data Quality has some 9,000 users around the world for QAS Batch, QAS Unify and the company’s capture and validation services. The Data Quality Platform has, in fact, been white-labelled from another vendor, and although Experian has only very few customers for this product as yet, there are approaching 500 using the original product. Needless to say, Experian has not only integrated its other products with the Data Quality Platform but is also working to extend the product.

The Data Quality Platform is a Java software product that runs under Windows (client and server), Linux (Red Hat and SUSE) as well as Solaris, AIX and HP-UX. Other Java-compliant platforms are available upon request. The product supports JDBC for database connectivity as well as offering support for both flat files and Microsoft Excel. However, it does not provide support for non-relational databases (including NoSQL sources). The product is underpinned by a proprietary correlation database. This stores data based on unique values (each value is stored just once) rather than tables or columns. This means that it uses less disk space than traditional databases, as well as enabling unique functionality and improving performance. As an indication of the latter, the Data Quality Platform supports as many as two billion records on-screen with full browsing and filtering capabilities. Another advantage that derives from having its own database is that there is no need to embed a third-party database engine within it, so there are no bugs or compatibility, administration or performance issues related to that. As far as functionality is concerned, the product can distinguish all (sub)types of data and one particularly interesting feature is the ability to assign monetary weightings during on-going monitoring. This is useful for justifying and prioritising remediation.
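
The idea of storing each unique value just once can be sketched in a few lines of Python. This is only an illustration of the general principle, not a description of the proprietary correlation database: the `ValueStore` class and its methods are hypothetical, and a real engine would add indexing, persistence and much more.

```python
class ValueStore:
    """Toy store that keeps each distinct value once and rows as value ids."""

    def __init__(self):
        self._ids = {}     # value -> integer id
        self._values = []  # integer id -> value
        self._rows = []    # each row is a tuple of value ids

    def add_row(self, row):
        """Encode a row, adding any values that have not been seen before."""
        encoded = []
        for value in row:
            if value not in self._ids:
                self._ids[value] = len(self._values)
                self._values.append(value)
            encoded.append(self._ids[value])
        self._rows.append(tuple(encoded))

    def get_row(self, index):
        """Decode a stored row back into its original values."""
        return tuple(self._values[i] for i in self._rows[index])

    def unique_count(self):
        """Number of distinct values held, however many rows refer to them."""
        return len(self._values)


if __name__ == "__main__":
    store = ValueStore()
    store.add_row(("Alice", "London"))
    store.add_row(("Bob", "London"))
    store.add_row(("Alice", "Paris"))
    print(store.unique_count(), store.get_row(2))
```

Here six cell values are held as four stored values plus small integer references, which is the kind of saving that repeated values in real datasets make possible.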

Another major feature is that it supports prototyping of the sort of business rules that are used within a data quality context or transformation rules within a data migration environment. In the latter case the product supports the generation of ETL (extract, transform and load) specifications and can be used as a standalone solution for data migration. The big advantage for the Experian technology set is that you don’t need a separate ETL tool with its associated staff, infrastructure and project timescales.

More generally, the Data Quality Platform supports full cleansing, enrichment and de-duplication using reference data, patterns, synonyms, fuzzy matching and parsing via over 300 native functions and any number of customer-specified functions. Functions can also be called by external applications via the REST API, allowing enterprise-wide re-use. It is also very flexible with respect to both data and metadata and supports customisations such as the construction of a business glossary associated with data assets. The product lacks support for external authentication mechanisms such as Active Directory or LDAP, using its own role-based security instead.

Experian Data Quality has a significant services business, both in the UK and USA. As an example, the business is worth around £3m in revenues in the UK. In addition to training, support and so forth, this division offers integration services for the company’s products, data strategy services, and what might be called bureau services for off-site, one-off cleansing initiatives.

Experian PowerCurve

Last Updated: 17th January 2020

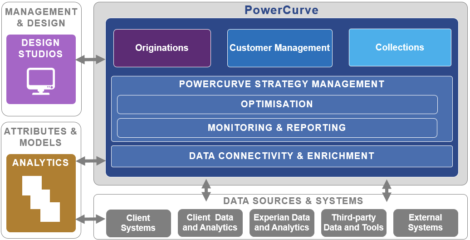

Experian PowerCurve is a decision management platform upon which the company delivers a variety of customer-centric and analytically driven decisioning solutions, as illustrated in Figure 1.

The PowerCurve platform makes use of a common component called PowerCurve Strategy Management and there are three core components: Design Studio, which provides the user interface and a common design environment for decision strategies and simulation; Decision Agent, which is the decisioning engine; and a common repository (not shown) for sharing reusable resources such as previously used strategies and so forth. It has an open architecture built on HDFS and Apache Spark that supports plug-ins from third party machine learning environments. There is also a core element called the Analytic Component Extension (ACE) framework, discussed below.

Additional capabilities are provided within the PowerCurve platform and associated solutions. For example, there is a Virtual Assistant to support customer engagement, and there are various pre-built templates to support particular functions.

Customer Quotes

“Today Alfa Bank’s employees are actively using PowerCurve to assess applications for all unsecured cash loans, credit cards, and refinancing products. We have substantially increased the application processing speed and decisioning accuracy, while maintaining the credit portfolio quality. Due to its flexibility, PowerCurve has made it possible to implement several new products in a short timeframe.”

Alfa Bank

Experian has recognised that there are four trends within the market related to operationalising analytics: a demand to use machine/deep learning models for predictive functions; issues around the operationalisation of those models; requirements around the monitoring, management and replacement (or retraining) of models; and governance issues with respect to both explainability and bias. As a result, Experian is in the process of enhancing PowerCurve, which was originally released in 2011, to support these capabilities. For example, it has introduced the ACE Framework. This provides an environment for plugging in predictive models developed within third party environments. Currently ACE supports PMML (Predictive Model Markup Language) based models as well as those developed using R or Python. H2O support is forthcoming and other plug-ins will be introduced based on customer demand.

However, there are some elements of what Bloor Research calls AnalyticOps that are not yet fully implemented, though Experian is by no means alone in this. For example, there are facilities for model auditing today, but it is not an easy and simple process. Experian plans to enhance both its explainability and model governance capabilities, adding model performance monitoring. While there are facilities for automatically deploying and testing models into production there are no workflow/approvals processes to complement this yet. The company is considering whether to implement such processes within PowerCurve or to leave this to third party tools.

AnalyticOps concerns the operationalisation, monitoring, improvement, management and governance of augmented intelligent models. As such models become more and more widely deployed, AnalyticOps capabilities will become more and more vital. Where most companies today have a handful of deployed models, if that, we expect large organisations to have thousands or tens of thousands of models to deploy and manage in the near future.

In practice, there are very few vendors that can address all, or even some, of the AnalyticOps requirements of a modern data science environment. Experian PowerCurve is one of these: it has a business user-friendly design environment enabling business users to easily include models in decision strategies and is in the process of adding and enhancing capabilities for identifying bias, model explainability, model performance monitoring and model management. In this context it is noteworthy that the company supports a methodology known as FACT for fairness, accuracy, (responsibility to the) customer, and transparency (including both explainability and auditability). By way of contrast, most vendors in this space have no model management capabilities at all, few have any capabilities with respect to bias, and explainability is by no means common.

The Bottom Line

PowerCurve has not historically been marketed as a generic decisioning platform but only as providing underlying capabilities to support the various decision management solutions offered by Experian. While the latter remains a valid strategy, it means that PowerCurve remains a well-kept secret. Given that many of the well-known data science platforms cannot match the capabilities of PowerCurve when it comes to AnalyticOps, at least at this time, we would like to see PowerCurve marketed more aggressively to a wider market.

Experian’s Data Governance Offering

Last Updated: 27th October 2023

Experian is a large and long-established vendor of data quality solutions but until now has not had a data governance offering. This has changed recently with its acquisition of UK-based software vendor IntoZetta. The IntoZetta software has a full range of data governance functionality and has a reputation for an easy-to-use interface for business users. It has been deployed at over thirty customers including Wessex Water, Weetabix, Notting Hill Genesis and part of the NHS. The data governance tool will in time be fully integrated into the Experian Aperture Data Studio suite, expanding the capabilities of that established data quality software.

Customer Quotes

“IntoZetta provided exceptional expertise, both in terms of resources and tools, to enable a successful and complex data migration on one of the largest Microsoft Dynamics implementations in Europe.”

Chris Lalley, Programme Director at Clarion Housing Group

At the heart of the offering is a data dictionary which stores information about business data entities and data flows. Technical metadata can be ingested from relational database catalogs and other sources such as SharePoint, and used as a basis for developing a catalogue of business terms such as “customer” and “asset” and their attributes. These business entities can be assigned data owners and be classified as desired, for example to note whether the data contains personally identifiable information that may require greater care than other data. The dictionary allows users and roles, such as data stewards and data owners, to be defined and rules to be developed around these roles. Data profiling is carried out to further flesh out the metadata, and data quality targets can be established.

There is an attractive visual interface to show the data at an overview level. Data flows can be defined and displayed in a visual form too, with basic data lineage shown. Customers can, for example, see everything associated with an entity like “customer”, such as related data and its assigned owner. The tool can interface with specialist data lineage tools for customers who need this. A link exists to Octopai and one is planned for Manta. The technology has a range of dashboards and reporting capabilities. For example, data quality progress can be monitored, as can the coverage of rules and ownership. There is a high-level report that neatly captures the overall progress of a data governance initiative, drawing on the underlying information in the data dictionary. The user interface is intuitive and aimed squarely at business rather than technology staff.

The IntoZetta technology already has some linkage to the Experian Aperture Data Studio suite, so for example some data quality functionality from Data Studio can be invoked from within IntoZetta and the results returned into the IntoZetta Data Dictionary. In addition, Experian Aperture Data Studio can push profile metrics out into IntoZetta, as Experian can with its data governance solution partners. Over the next year or so it is likely that the integration will go deeper.

Currently, the data governance market leaders at the high end of the market have a top-down approach to corporate data governance, as seen in tools like Collibra and Alation. While Experian partners, and will continue to partner, with several of these solution providers, it is likely that Experian will position its data governance tool so as to be suitable for a more bottom-up approach. This may be more suitable for projects within business lines or departments. It will also be well suited to small and medium-sized entities. This would mirror the Experian approach to other software such as its data quality suite, and so would fit easily into its existing sales and marketing channels.

The Bottom Line

Experian is a large and long-established data quality vendor, and given the linkage between data governance and data quality it makes sense for Experian to bring out some sort of data governance offering. By purchasing IntoZetta it can build on an established product that has proven its worth at a number of customers and integrate it into its existing Aperture Data Studio product line. Customers looking for a business-focused data governance tool that is either aimed at SMEs or suitable for departmental data governance solutions should take a look at the new Experian offering.

Experian’s Data Quality Investments

Last Updated: 22nd February 2024

Mutable Award: Gold 2024

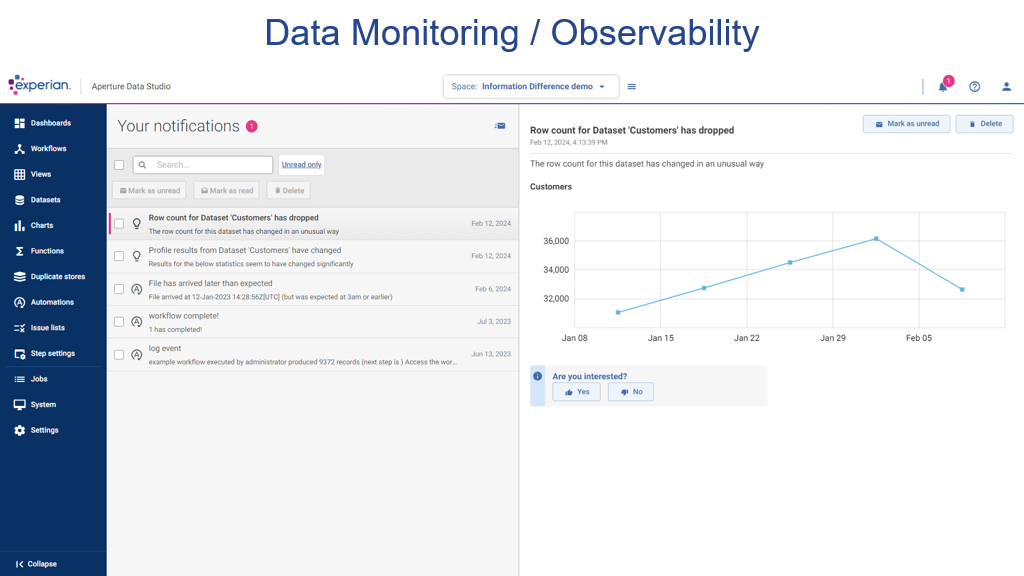

Experian Data Quality Suite is a full-function tool for data quality, from data profiling through to data cleaning, data matching, data enrichment and data monitoring. It recently expanded into data governance through its acquisition of IntoZetta in September 2023, which will be rebranded as Aperture Governance Studio as part of its Aperture software suite.

Experian’s data quality solutions have been widely deployed for customer name and address validation. Indeed, the company has been the market leader in this for some time. Over the last few years, Experian has significantly modernised and expanded its data quality software, with plenty of visualisation aids to ease the job of understanding and improving the quality of data. Recent new features include the automatic detection of sensitive data, visual associations between data, automated rule creation, and smarter data profiling.

Customer Quotes

“The more we use the tool the more possibilities for its use present themselves.”

Andreea Constantin, Data Governance Analyst, Saga

“We’ve managed to improve our customer data, reduce costs and provide a better customer experience – all while staying way ahead of the ROI. We’re thrilled with the first class products and services that have been provided; Experian Data Quality is clearly an industry leader.”

Steve Tryon, SVP of Logistics, Overstock.com

“By implementing a data quality strategy and using these tools in conjunction, CDLE has a more streamlined and efficient claimant process,” explained Johnson. “When a person files a claim, we have the ability to verify in real time, which results in a faster process time.”

Jay Johnson, Employer Services, CDLE

The Experian data quality software has a broad range of functionality. It can profile data to carry out statistical analysis of a dataset to provide summary information (such as the number of records and the structure of the data). The tool can also discover relationships between data and check on anomalies in the content. For example, profiling would detect whether a field called “phone number” had any records that did not match the normal format for a phone number or had missing data. The software can set up data quality rules that can be used to validate data, such as checking for valid social security numbers or valid postal codes. It can detect likely duplicates and carry out merging and matching of such records, for example, merging files with common header names.
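
The phone number example can be sketched in Python as a simple column profile. This is an illustration of the kind of check described, not Experian's implementation: the `profile_column` function and the deliberately loose `PHONE_PATTERN` are assumptions, and real validation would be country-specific.

```python
import re

# Assumed "normal format": optional leading +, then 8-16 digits in total,
# with spaces or hyphens allowed between them.
PHONE_PATTERN = re.compile(r"^\+?\d[\d\s-]{6,14}\d$")


def profile_column(values, pattern=PHONE_PATTERN):
    """Count valid, invalid and missing entries in a column of values."""
    summary = {"total": len(values), "valid": 0, "invalid": 0, "missing": 0}
    for value in values:
        text = "" if value is None else str(value).strip()
        if text == "":
            summary["missing"] += 1   # null or blank entry
        elif pattern.match(text):
            summary["valid"] += 1     # matches the expected format
        else:
            summary["invalid"] += 1   # present but malformed
    return summary


if __name__ == "__main__":
    column = ["+44 20 7946 0958", "", None, "not a number", "020-7946-0958"]
    print(profile_column(column))
```

A summary like this is what would then feed a data quality rule or target, for instance flagging the column if the share of invalid or missing values exceeds an agreed limit.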

The software can enrich data too. For example, you might have a company with an HQ at a business address. As well as just validating the address, the software can add additional information that it knows about that business. At a basic level that might be something like adding a missing postal code, but it can be much more elaborate. Experian in particular has a rich vein of business data in its wider business, so could add information about the company such as the number of employees.

Data quality is a long-standing issue in business, with various surveys consistently showing that only around 30% of executives fully trust their own data. Poor data quality can have serious consequences, from the inconvenience of misdirected deliveries or invoices through to significant regulatory or compliance fines.

GDPR non-compliance can result in fines of up to 20 million euros or 4% of worldwide annual turnover, whichever is higher, and there are numerous other regulatory regimes in the USA and elsewhere that impose fines for poor data quality in industries such as finance (for example, the MiFID II regulations) and pharmaceuticals (CGMP rules in the USA).
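
To give a sense of scale, the upper-tier GDPR cap can be worked through as simple arithmetic. The Python sketch below assumes the cap is the higher of 20 million euros and 4% of worldwide annual turnover; the function name `gdpr_max_fine_eur` is hypothetical, the figures are whole euros, and actual fines are set case by case by regulators and are usually well below the cap.

```python
FIXED_CAP_EUR = 20_000_000  # fixed upper-tier ceiling in euros
TURNOVER_PERCENT = 4        # percentage of worldwide annual turnover


def gdpr_max_fine_eur(annual_turnover_eur: int) -> int:
    """Return the upper-tier cap: the higher of the fixed and turnover-based amounts."""
    turnover_based = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 1_000_000_000):
        print(turnover, gdpr_max_fine_eur(turnover))
```

For a company with 100 million euros of turnover the fixed 20 million euro figure applies, while at 1 billion euros of turnover the 4% figure (40 million euros) becomes the ceiling.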

Another recent trend is likely to raise data quality higher up the corporate executive agenda. Companies, inspired by the success of ChatGPT and others in the public consciousness, are widely deploying generative AI technology in assorted industries. However, they are increasingly finding that they need to train such large language models on their own data (and operate private rather than public versions of such AIs for security reasons). It turns out that the effectiveness of such models is highly dependent on the quality of the data they are trained on, echoing the old “garbage in, garbage out” rule of computing. It is therefore important to assess and usually improve the quality of corporate data before a broad roll-out of generative AI technology.

The Bottom Line

Experian has a well-proven and comprehensive set of capabilities for data quality. It has expanded significantly in functionality in recent times, with its data governance capability the latest manifestation of this. With the success of generative AI deployments in corporations turning out to be heavily dependent on good data quality, vendors such as Experian should see renewed interest in their technology.